‘The Creator’ VFX Supervisors Talk AI, New Technology & ILM’s Involvement

Oct 2, 2023

The Big Picture

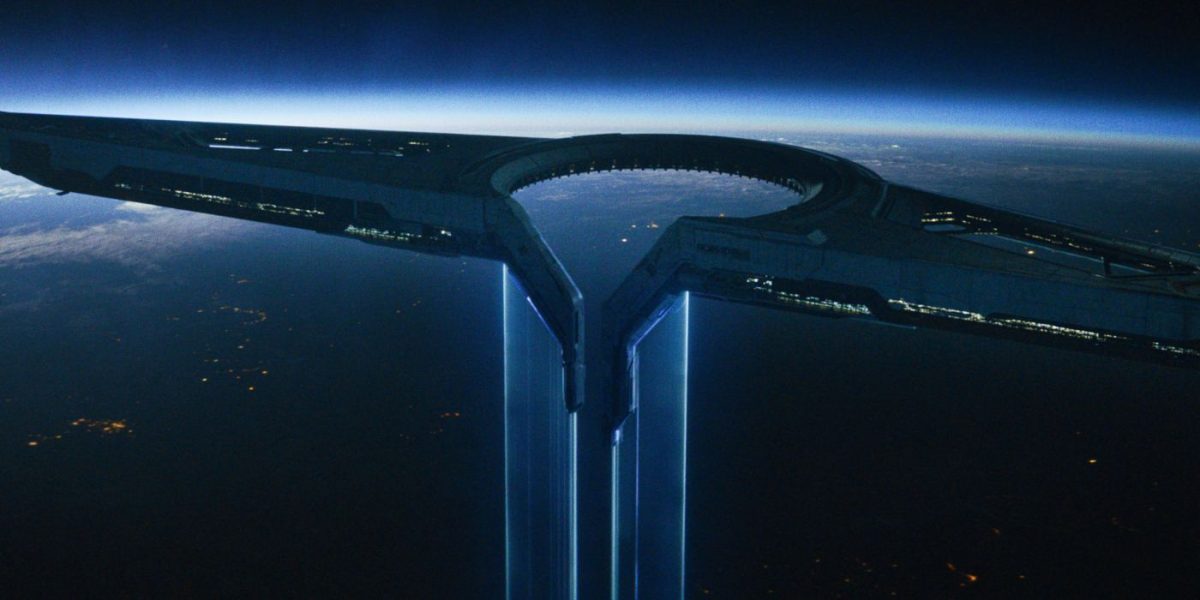

Gareth Edwards utilized filmmaking techniques he has refined since his first indie feature, Monsters, to create The Creator. Industrial Light & Magic helped achieve Edwards’ radical vision on a budget. ILM visual effects supervisors Jay Cooper and Andrew Roberts played crucial roles in bringing The Creator’s dystopian future to life, with Roberts supervising on set and Cooper overseeing post-production. The film stars John David Washington as Joshua, an ex-special forces agent in a grim future who must destroy an AI child weapon created by The Creator. The film was shot across eight countries and 80 different locations, and its visuals are stunning.

In Gareth Edwards’ (Rogue One: A Star Wars Story) The Creator, the director uses filmmaking techniques he’s honed in the years since his first indie feature, Monsters. Starring John David Washington and an ensemble cast, this sci-fi epic has the potential to change the game in Hollywood, and Edwards didn’t do it all alone. With the help of George Lucas’ renowned visual effects company, Industrial Light & Magic, this dystopian future is breathtaking on a budget, but achieving Edwards’ radical vision required diligence both on and off set.

Enter ILM visual effects supervisors Jay Cooper and Andrew Roberts. To flesh out (or rather, mechanize) the world of The Creator, a grim future in which an army of artificial intelligence is at war with mankind, Roberts served as the on-the-ground ILM supervisor, while Cooper’s work took place in post. In the film, Washington plays Joshua, an ex-special forces agent tasked with locating and destroying a world-ending weapon designed by The Creator, the mind behind the AI. It turns out the weapon is an AI child, Alphie (played by Madeleine Yuna Voyles), and suddenly, Joshua isn’t so certain about his mission. The Creator also stars Gemma Chan, Allison Janney, Ken Watanabe, and Ralph Ineson, and was filmed across “eight different countries, 80 different locations” with gorgeous visuals.

During an interview with Collider’s Steve Weintraub, Cooper breaks down his work in post, while Roberts explains the duties of the on-set VFX supervisor. They share their personal views on real-life AI in the industry, the challenges of achieving Edwards’ vision, and whether working this way is more efficient or more difficult. The duo also discuss new developments in their field, the areas they hope to see advance further, and what about their work on The Creator might surprise viewers. Check out all of this and more in the full transcript below.

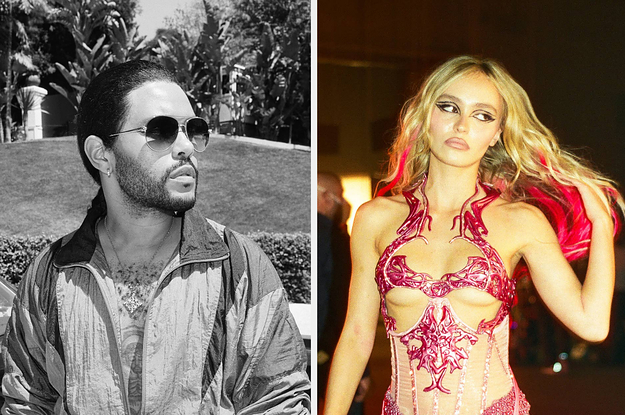

Image via Disney

COLLIDER: First of all, let me start by saying you guys did fantastic work on this film, and it’s great to talk with both of you. Before I jump into The Creator, one of the things that’s going on right now in the industry that everyone is talking about is AI, and I’m curious for the two of you: do you view AI as an existential threat to VFX or do you view AI as something that actually could benefit the industry in some ways with human control?

JAY COOPER: I’ll say this very clearly: it’s not going anywhere. It’s definitely here to stay, and we are using it in our pipeline, or it’s leaking into our pipeline. It’s funny, it’s like the exosuit, right? It’s a way for us to be more impactful with human effort. So, it’s leaking into our layout world and pose estimation, facial understanding, image cleanup, texture synthesis, and things of that nature, but it’s not going to be replacing humans. At the core of what we do are great artists making good decisions and receiving feedback. I mean, I’m in this space pretty deep; there’s very, very little way to influence these AI tools after you get an initial representation of whatever we’re asking for. So the thing that we bring to the table is understanding what the ask is, interpreting it, and then evolving as we’re given feedback. And right now, there are no tools that are like that. I don’t see those any time soon. So to me, it feels like a bigger hammer, you know, and it’s able to make us more effective or punch harder or whatever, but I don’t see it as an existential threat.

ANDREW ROBERTS: I would agree. I mean, machine learning is fascinating, what one can do with those tools, but it really is about artists. On The Creator and any other project that ILM works on, it’s about having talented artists translate the vision of the director and the production designer, working from what we capture on set, so that we can turn it into final shots.

It’s very similar, I guess, to asking AI to write something. You can’t really say, “Tweak these little things.” It’s, as you said, one large hammer that just comes down.

COOPER: I don’t know if you spend any time doing it, but it’s incredibly difficult to nudge. If you have a small request in the AI world, every single time you ask it, you’re starting from scratch, at least with the tools that I’m familiar with.

Jumping into why I get to talk to you guys: what Gareth did is, to me, a revolution in filmmaking. It’s so different. Can you talk about that difference, having just a small crew on set and doing everything in post?

ROBERTS: It certainly was a smaller footprint. I was the on-set visual effects supervisor, so there was a lot of planning. There were lots of conversations. It wasn’t that visual effects wasn’t considered during the shoot. We went through storyboards, there were a lot of in-depth preproduction meetings, and then each morning, I would meet with Gareth, with Oren Soffer, our DP, and with our first AD, and look at, “What are the shots up for the day? Which ones are going to be a heavier lift from a visual effects point of view?” And I would make clear, “Okay, I’m gonna need to capture the lighting information here. I’m gonna need to make sure we get those measurements. Give me time for these things.” It would be less time than I would typically have on a more traditional visual effects set, but knowing the absolute musts and then getting out of the way so Gareth could really focus on the characters was the call of the day. So yeah, it was definitely a more nimble, lighter set. Gareth didn’t want a huge video village that he would then have to avoid when he was shooting at times.

If you had an overhead view of Gareth filming, there was a snake behind him with the various people just sort of shifting out of the way, because he really wanted that freedom to reframe. They’d be shooting this way on Alphie and Joshua, and then he would tell them, “Reset,” and now he’d swing the other way to capture a close-up on one of the other characters, and everyone would get out of the way. He often used the phrase, “I don’t want the tail wagging the dog. I’m shooting a film, and I want all of these elements to support what I’m doing, and I just really want to focus on what’s on camera.”

COOPER: We had conversations in our first weeks that if someone came up to the set, he wanted them to mistake it for a student production, right? That was sort of the footprint that he was aspirationally hoping for. Now, I don’t think it really got that small. Certainly, Andrew could speak to that. There’s a lot of work that went into it, a huge operation, but that was the aspirational goal. And I think the reason it was the goal is that he wanted to find a way to have the best of the movies he’s worked on. He wanted the flexibility of Monsters with the budget of Rogue One in terms of post. So that’s the spirit in which we launched into this endeavor: a smaller footprint on set, so that he had some room in post to expand the already very impressive visuals he acquired, like the photography in eight different countries, 80 different locations, and then be able to play with it in the computer and in post, where we could try a bunch of different things and work with James Clyne, our production designer, to build out these enormous worlds and take the best of what was in the plates and expand them out.

Yet to me, reading that there were no motion capture suits or tracking markers on set or things that I associate with movies like this…Do you think this movie could have been made five years ago, or is it only recent tech that has allowed it to be where it is right now?

COOPER: I think yes, it could have been made five years ago. It would have been a lot harder. Everything in visual effects really builds on the experience of something we’ve done previously. So speaking for the simulants, for example, we had a number of tools that we were using that we had developed for The Irishman, that we had developed for some of the face swap work that we had done on Mandalorian. So, it’s like a pyramid. Even to the extent of our artists, our artists have done similar things in different ways, so we’re constantly innovating and expanding our horizons. But a lot of what we do, the way we try to work as a company, is we’re sort of pushing the peanut uphill repeatedly based on the production that came before it. So, any success we had is not only due to what was shot on set but the previous work that we’ve done at ILM.

For both of you, what is the next hurdle in VFX that you’re hoping to be able to solve, or is there something going on behind the scenes, that thing that you’ve always tried to get…

COOPER: The great white whale.

Yeah, exactly.

COOPER: I think there’s a lot being done in the facial performance space and synthetic humans and things like that, trying to find a toolset that is more democratized and less super specialized. I think that’s something that we’re looking at a lot. There’s a lot of interesting stuff out there. I don’t know if you’re familiar with NeRFs or Gaussian Splatting. There are all these really interesting machine-learning-adjacent techniques where you basically can acquire footage and then re-navigate it in post-production. Those are some exciting emerging technologies. There’s also a lot of machine learning work on neural rendering, where you can sort of shortcut some of the rendering process and reduce your render time significantly, which is really interesting. There’s a lot of really cool stuff out there. I started a long time ago, when we were making the transition from physical models to computer models, and I feel like we’re at another inflection point, where some really exciting technology is going to be unearthed in the next five years or so.

ROBERTS: On the technology side, I think it’s leveraging real-time engines. I’m looking forward to a point where a director is able to get more of a sense, at the moment of capture, of what the final shot might be like. And we’ve seen examples of that where they’re able to frame and see, “Okay, well, here’s my character, and these are the added elements that will end up going in in post.” So I think it’s exciting to see where some of that real-time feedback is going.

Was there anything that Gareth asked you guys to do that actually reached a technical limitation where you’re like, “We actually can’t do this?”

COOPER: I don’t know if there’s anything we couldn’t do. Usually, if we put enough heads together, we can figure out just about everything. There are some things that are tricky. I mean, there are times when we’re simulating hundreds of robots in that crusher; that’s technically difficult, because they all have to interact with one another, and it becomes computationally expensive. There’s a huge amount of rigid body destruction, both on the bridge that blows up and in the NOMAD destruction, with massive explosions and robots breaking apart and things of that nature. But yeah, for most of it, we were aware of how to do it.

Another difficulty, in both an aesthetic and a technical sense, was, for our simulants, how do you commingle the soft bodies of people’s heads with rigid hardware, right? So, you have these adjacencies where a jiggly part of Ken Watanabe’s chin has to connect to and be anchored into his metallic parts. So we developed some tools to lock certain things down, bleed off the movement in the parts of his face that have to move around, and anchor them to the metal. That was a difficult thing to do. And ultimately, it was really important, because your eye goes to the simulants, and if you’re ever bored for a second, you’re studying that effect and trying to figure out how it all works together.

I’ve seen the film twice, and yeah, I was examining things on my second viewing. For both of you, what do you think would surprise fans of Hollywood movies with big VFX to learn about making movies like The Creator or other things?

COOPER: To me, it’s who is a robot and who is a simulant. For the core characters, we knew who those were right out of the gate, but some of them were chosen pretty late in the game. From a filmmaking perspective, making decisions later, once you have a cut together, and making choices based on what’s going to be the most impactful, was a little different for me, and I think it ultimately serves the movie. The later you can make some of these choices, the better you know exactly what you’re up against…and that extended to cars, too. Things as trivial as, “Oh, we started on this big wide, and this car, this car, this car, they don’t look like they’re modern. Let’s come up with designs for them.” And so we would work with James, and he would design new backs for the cars, and we would try to figure out how to do it as cleanly and cheaply as possible while still having a huge amount of visual impact. That kind of thing is always a fun gag, and I think it plays to Gareth’s strengths. I mean, this is sort of how he made Monsters. He traveled to South America, shot the movie, and then tried to figure out how he could wedge in shots to push up the production value. The only difference is that this time, he had ILM.

Exactly. It wasn’t in his bedroom.

COOPER: No, not in his bedroom.

ROBERTS: I think people will be fascinated to see, when we get into the behind-the-scenes material, how integrated the live-action environments were with the visual effects: the huts and different structures that were built, how much he captured in camera, and then what was built on top of that to really create this new world.

The Creator is now playing in theaters and IMAX.